Executive Program on Instructional Course Design & Educational Technology

This program is aimed at executives and educators who wish to hone their skills in course design and technological applications.

How to Lead Successfully in Course Creation & Design

Dates: Custom Tailored, Based on Your Needs

Time: Mondays from 6-8 PM EST

Location: GWU, Duques 155

Objective: Help Empower Business Leaders and Educators to Lead Successfully in Course Creation & Design.

Tentative Program

Week 8:

Today's Agenda:

RECAP: Canva Charts & Presentations

A- PowerPoint Presentations

B- Performance Objectives

C- Assessment Instruments

HW:

- Webpage on Instructional Strategy

- Webpage on Instructional Materials

A- PowerPoint Presentations

Alignment

Sample Presentation (pptx)

Sample Presentation (pdf)

Nada Salem is inviting you to a scheduled Zoom meeting.

Topic: GWU GROUP 3 Zoom Meeting

Time: Dec 4, 2024 06:00 PM Eastern Time (US and Canada)

Join Zoom Meeting

https://us06web.zoom.us/j/

Meeting ID: 845 0393 1964

Passcode: 955688

B- Performance Objectives

A performance objective is a detailed description of what students will be able to do when they complete a unit of instruction (p. 125). Performance objectives are also sometimes called behavioral objectives or instructional objectives.

Performance objectives are derived from the skills in the instructional analysis. One or more objectives should be written for each of the skills identified in the instructional analysis. For every unit of instruction that has a goal, there is a terminal objective (p.131). The terminal objective has the 3 components of a performance objective, but it describes the conditions for performing the goal at the end of the instruction.

For each objective we write, we need a specific assessment of that behavior, and the behavior must be observable!

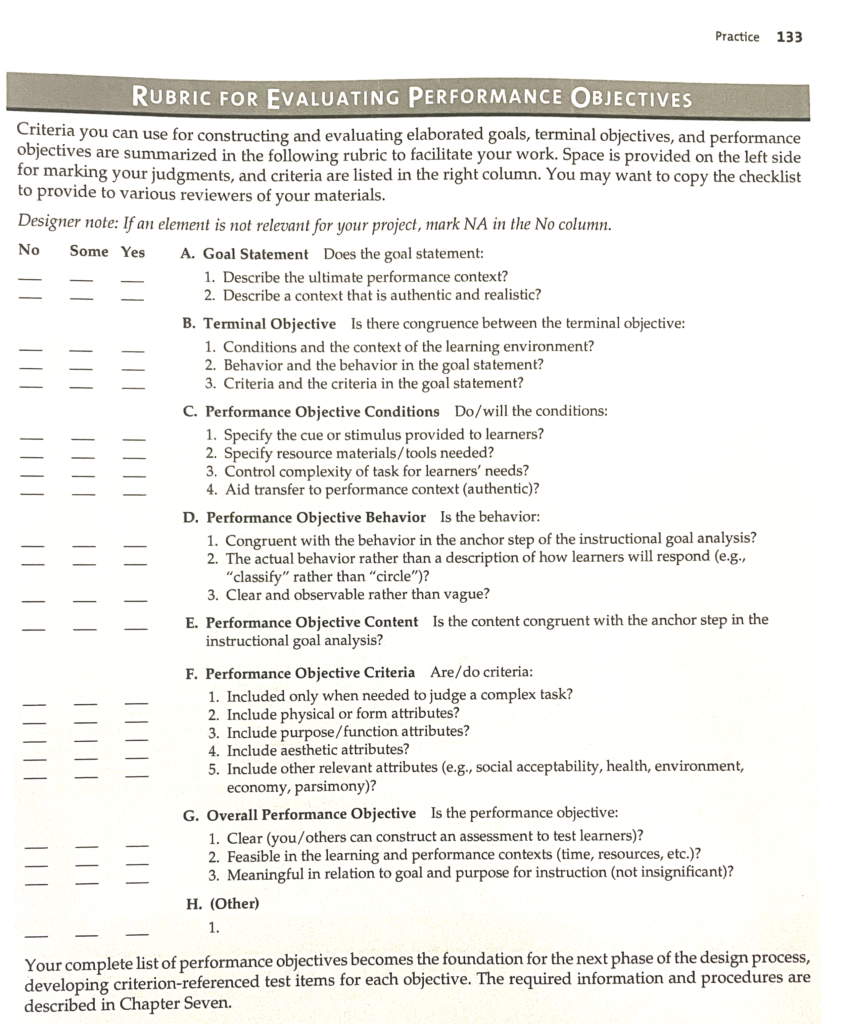

Important question to ask ourselves when writing objectives: (p.133)

"Could I design an item or task that indicates whether a learner can successfully do what is described in the objective?"

Components of an Objective:

1. Performance: A description of the skill or behavior identified in the instructional analysis (what the learner will be able to do, covering both the action and the content or concept).

2. Conditions: A description of the conditions that will prevail while a learner carries out the task, that is, what the learner will be given or what will be available to him/her (also check pp. 127-129)

- computer to use?

- calculator?

- paragraph to analyze?

- story to read?

- content?

- from memory?

3. Criteria: A description of the measures, or standards that will be used to evaluate learner performance (also check pp. 130-131)

- the tolerance limits for a response

- range

- qualitative judgment (what needs to be included in an answer or physical performance judged to be acceptable)

- given time period/circumstance

- a checklist of behavior types or a rubric, used to define complex criteria for acceptable responses
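The three components above can be pictured as three fields of one record. The following is only a minimal sketch for illustration: the class name, the field names, and the example objective are all hypothetical and do not come from the textbook.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceObjective:
    """One objective made of the three components listed above."""
    conditions: str   # what the learner is given or has available
    performance: str  # the observable skill or behavior
    criteria: str     # the standard used to judge the performance

    def statement(self) -> str:
        # Assemble the parts in conditions, performance, criteria order.
        return f"{self.conditions}, the learner will {self.performance} {self.criteria}."

    def is_complete(self) -> bool:
        # The objective is usable only if none of the three parts is blank.
        parts = (self.conditions, self.performance, self.criteria)
        return all(part.strip() for part in parts)


# Hypothetical example objective, invented for illustration only.
objective = PerformanceObjective(
    conditions="Given a calculator and ten two-step word problems",
    performance="solve each problem",
    criteria="with at least eight of the ten answers correct",
)
print(objective.statement())
```

Keeping the conditions and the criteria in separate fields is one way to avoid confusing the two when drafting objectives.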

The steps in writing performance objectives: (p.132)

- Edit goal to reflect eventual performance context.

- Write terminal objective to reflect context of learning environment.

- Write objectives for each step in goal analysis for which there are no substeps shown.

- Write objectives that reflect the substeps in one major objective, or write objectives for each substep.

- Write objectives for all subordinate skills.

- Write objectives for entry behaviors if some students are likely not to possess them.

Some important guidelines for writing performance objectives:

- Always state objectives from the point of view of the learner (i.e., what the learner/trainee will be able to do) NOT from the point of view of the instructor (i.e., what the teacher/trainer will teach)

- Always include at least the three components (conditions, performance, criteria) that a Mager-type objective calls for (read on below for more information)

- Make sure the Performance part of your objective is observable

- Make sure the standards (criteria) are measurable, clear and not open for interpretation

- Do not confuse conditions and standards

- Take conditions into consideration when determining realistic criteria

Relevant Links:

Example of Performance Objectives

Mission, Goals, Objectives (Bloom's Taxonomy)

Relevant Book:

Preparing Instructional Objectives: A Critical Tool in the Development of Effective Instruction by Robert F. Mager (1977)

C- Assessment Instruments

A- Criterion-referenced instruments

B- Student work samples

C- Student Narratives

D- Performance Standards and Assessment Rubrics

A- Criterion or objective-referenced assessment instruments:

(Learner-Centered, p. 145)

> Assessment that enhances student learning (Baron, 1998)

Criterion-referenced assessments are

- linked to instructional goals

- linked to objectives derived from goals

The purpose of this type of assessment is to evaluate:

- students' progress: they enable learners to reflect on their own performances, so that learners ultimately become responsible for the quality of their work

- instructional quality: they indicate to the designer which components of the instruction went well and which need revision

A criterion-referenced assessment also contains a criterion that specifies how well a student must perform the skill in order to master the objective.

Therefore, performance criteria should be congruent with the objectives, learners, and context.

The performance required in the objective must match the performance required in the test (p. 146).

Two uses of criterion-referenced tests:

- pretests: they have 2 goals

- to verify that the student possesses the anticipated entry behaviors

- to measure the student's knowledge of what is to be taught.

- posttests: they are used primarily to measure the student's knowledge of what was taught.

Types of Criterion-Referenced Tests & Their Uses (p.146)

a- Entry behaviors test: It is given to learners before they begin instruction in order to assess those learners' mastery of prerequisite skills.

This entry behavior test should cover the skills that are more questionable than others in terms of being already mastered by the target population.

From entry behaviors test scores designers decide whether learners are ready to begin the instruction.

b- Pretest: It is given to learners before they begin instruction in order to determine whether they have previously mastered some or all of the skills that are to be included in the instruction.

If all the skills have been mastered, then the instruction is not needed.

The pretest includes one or more items for key skills identified in the instructional analysis, including the instructional goal.

Since both entry behaviors tests and pretests are administered prior to instruction, they are often combined into one instrument.

From pretest scores designers decide whether the instruction would be too elementary for the learners and, if not too elementary, how to develop instruction most efficiently for a particular group.

A pretest is valuable only when it is likely that some of the learners will have partial knowledge of the content.

c- Practice/rehearsal tests: They focus on the lesson level rather than on the unit level. The purpose of practice tests is to:

- provide active learner participation

- enable learners to rehearse new knowledge and skills

- enable learners to judge for themselves their level of understanding and skill

- enable the professors to provide corrective feedback

- enable the professors to monitor the pace of instruction

d- Posttests: They are administered following instruction, and they are parallel to pretests (excluding entry behaviors items).

Posttests measure objectives included in the instruction. They assess all of the objectives, and especially focus on the terminal objective.
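The decisions that designers draw from the entry behaviors test and the pretest described above can be sketched as a small routine. This is an illustrative assumption, not a procedure from the textbook: the function names, the skill names, the scores, and the 0.8 mastery criterion are all hypothetical.

```python
def mastered(scores: dict[str, float], criterion: float) -> set[str]:
    """Return the skills whose score meets or exceeds the criterion."""
    return {skill for skill, score in scores.items() if score >= criterion}


def plan_instruction(entry: dict[str, float],
                     pretest: dict[str, float],
                     criterion: float) -> str:
    # Entry behaviors test: are the learners ready to begin?
    if mastered(entry, criterion) != set(entry):
        return "not ready: teach prerequisite skills first"
    # Pretest: have all of the skills already been mastered?
    already = mastered(pretest, criterion)
    if already == set(pretest):
        return "instruction not needed"
    # Otherwise, focus instruction on the skills not yet mastered.
    remaining = sorted(set(pretest) - already)
    return "teach: " + ", ".join(remaining)


# Hypothetical scores (proportion correct) for one group of learners.
entry_scores = {"read a table": 0.9}
pretest_scores = {
    "state objective": 0.85,
    "write criteria": 0.4,
    "write conditions": 0.5,
}
print(plan_instruction(entry_scores, pretest_scores, criterion=0.8))
```

Because posttests are parallel to pretests, the same `mastered` check could be applied to posttest scores to see which objectives were reached after instruction.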

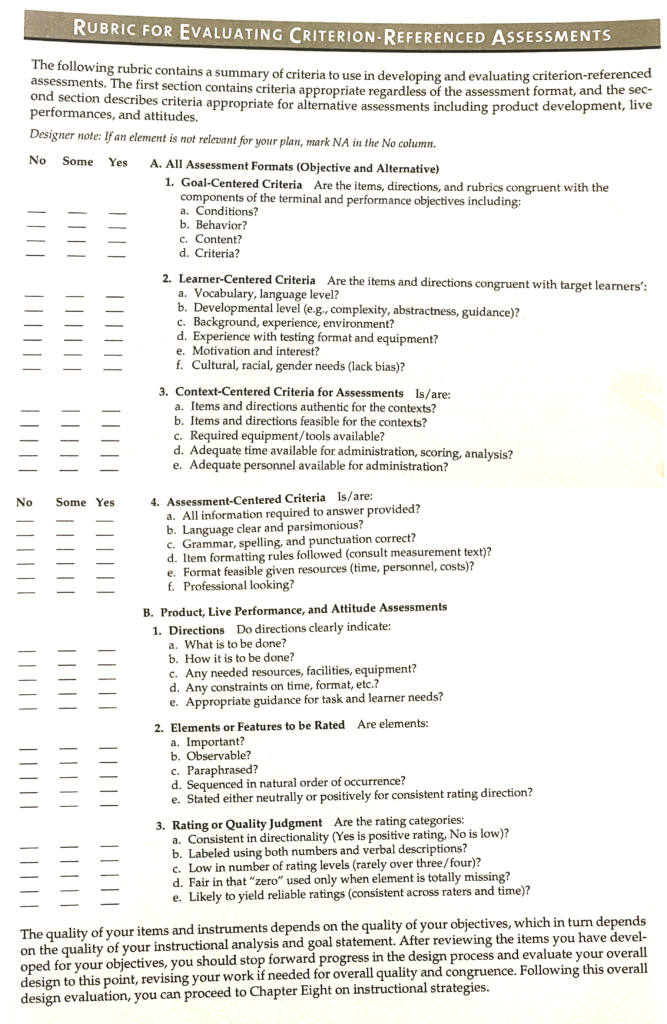

Designing a Test (p.149)

A criterion-referenced test is designed by matching the learning domain with an item or assessment task type.

a- Objectives in the Verbal Information Domain: they require objective-style test items which include the following formats:

- short answer

- alternative response

- matching

- multiple-choice items

b- Objectives in the Intellectual Skills Domain: they require one of the following:

- objective-style test items

- the creation of a product (essay, research paper...)

- a live performance of some type (act in a play, make a presentation, conduct a business meeting...)

If an objective requires the learner to create a unique solution or product, it would be necessary to

- write directions for the learner to follow

- establish a set of criteria for judging response quality

- convert the criteria into a checklist or rating scale (rubric) that can be used to assess those products

c- Objectives in the Affective/Attitudinal Domain: They are concerned with the learner's attitudes or preferences. Items for attitudinal objectives require one or both of the following:

- that the learners state their preferences

- that the instructor observes the learners' behavior and infers their attitudes from their actions

d- Objectives in the Psychomotor Domain: Items for these objectives are usually sets of directions on how to demonstrate the tasks, and they require the learner to perform a sequence of steps that represents the instructional goal. Criteria for acceptable performances need to be identified and converted into a checklist or rating scale that the instructor uses to indicate whether each step is executed properly.

Writing Test Items (p. 151)

There are 4 categories of criteria that should be considered when creating test items:

1- Goal-Centered Criteria: The test items and assessment tasks should be congruent with the terminal and performance objectives; they should provide learners with the opportunity to meet the criteria necessary to demonstrate mastery of an objective.

No rule states that performance criteria should or should not be provided to learners. Sometimes it is necessary for them to know the performance criteria and sometimes it is not.

(It is very beneficial for learners to receive the criteria before they are given the test; this helps them prepare for it much more efficiently.)

2- Learner-Centered Criteria: The test items and assessment tasks must be tailored to the characteristics and needs of the learners. Criteria in this area include considerations such as learners'

- vocabulary and language levels

- developmental levels (for setting task complexity)

- motivational and interest levels

- experiences and backgrounds

- special needs

Designers should consider how to aid learners in becoming evaluators of their own work and performances. Self-evaluation and self-refinement are two of the main goals of all instruction since they can lead to independent learning.

3- Context-Centered Criteria: The test items and assessment tasks must be as realistic or authentic to the actual performance setting as possible. This criterion helps to ensure transfer of the knowledge and skills from the learning to the performance environment.

4- Assessment-Centered Criteria: The test items and assessment tasks must include clearly written and parsimonious directions, resource materials, and questions, as well as correct grammar, spelling, and punctuation!

To help ensure task clarity and to minimize learners' test anxiety, learners should be given all the necessary information to answer a question before they are asked to respond.

Items should not be written to trick learners! Ideally, learners should err because they do not possess the skill, not because the test item or assessment is convoluted and confusing!

Evaluating Tests and Test Items (p.156)

When writing test directions and test items, the designer should ensure the following:

- test directions are clear, simple, and easy to follow

- each test item is clear and conveys the intended information

- conditions under which responses are made are realistic

- the response methods are clear to learners

- appropriate space, time, and equipment are available

Using Portfolio Assessments (p. 162)

Portfolios are collections of criterion-referenced assessments that illustrate learners' work. These assessments might include:

- objective-style tests that demonstrate progress from the pretest to the posttest

- products that learners developed during instruction

- live performances

- assessments of learners' attitudes about the domain studied or the instruction

There are several features of quality portfolio assessment:

- the work samples must be anchored to specific instructional goals and performance objectives

- the work samples should be the criterion-referenced assessments collected during instruction (the pretests and posttests)

- each assessment is accompanied by its rubric with a student's responses evaluated and scored, indicating the strengths and problems within a performance.

The assessment of growth is accomplished at two levels:

- learner self-assessment: learners examine their own materials and record their judgments about the strengths and problems + what they might do to improve the materials

- instructor assessment: instructors examine the set of materials without looking at the learner's evaluations, and record their own judgments.

Then the learner and the instructor compare their evaluations and discuss any discrepancies between the two.

As a result, they plan together the next steps the learner should undertake to improve the quality of his/her work.
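The comparison step described above can be sketched as follows, assuming both parties rate the same rubric criteria on the same numeric scale. The function name, criteria names, and ratings are hypothetical and used only for illustration.

```python
def discrepancies(learner: dict[str, int],
                  instructor: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Return the criteria on which the two independent ratings differ.

    Each value is a (learner rating, instructor rating) pair.
    """
    return {
        criterion: (learner[criterion], instructor[criterion])
        for criterion in learner
        if criterion in instructor and learner[criterion] != instructor[criterion]
    }


# Hypothetical ratings recorded separately before the discussion.
self_ratings = {"organization": 4, "evidence": 3, "mechanics": 4}
instructor_ratings = {"organization": 4, "evidence": 2, "mechanics": 3}

for criterion, (own, other) in discrepancies(self_ratings, instructor_ratings).items():
    print(f"{criterion}: learner {own}, instructor {other}")
```

The criteria returned here are the ones the learner and the instructor would discuss when planning next steps.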

B- Student work samples:

- written reports

- rough drafts

- notes

- revisions

- projects

- journals

- peer reviews

- self-evaluations

- anecdotal records

- class projects

- reflective writings

- artwork; graphics

- photographs

- exams

- computer programs

- presentations

C- Student Narratives: A narrative description that

- states goals

- describes efforts

- explains work samples

- reflects on experience.

D- Performance Standards and Assessment Rubrics:

A variety of scoring criteria can be used in developing performance standards or assessment rubrics. Standards and rubrics should include:

- performance levels (a range of various criterion levels)

- descriptors (standards for excellence for specified performance levels)

- scale (range of values used at each performance level)

Examples of Rubrics:

- analytic assessment rubric

- holistic assessment rubric: it provides a general score for a compilation of work samples rather than individual scores for specific work samples
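The difference between the two rubric types can be sketched in a few lines. This is a minimal illustration under stated assumptions: the three performance levels, their descriptors, the point scale, and the work sample names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    name: str        # performance level
    descriptor: str  # what work at this level looks like
    points: int      # value on the scale


# Hypothetical three-level scale, invented for illustration only.
LEVELS = [
    Level("beginning", "major elements missing", 1),
    Level("proficient", "meets the stated standard", 2),
    Level("exemplary", "exceeds the stated standard", 3),
]
POINTS = {level.name: level.points for level in LEVELS}


def analytic_scores(ratings: dict[str, str]) -> dict[str, int]:
    """Analytic rubric: an individual score for each work sample."""
    return {sample: POINTS[level] for sample, level in ratings.items()}


def holistic_score(overall_level: str) -> int:
    """Holistic rubric: one general score for the whole compilation."""
    return POINTS[overall_level]


ratings = {"rough draft": "beginning", "final report": "exemplary"}
print(analytic_scores(ratings))
print(holistic_score("proficient"))
```

In both cases the same levels, descriptors, and scale are used; only the unit being scored changes.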

Relevant Links:

Main Textbook:

- "The Systematic Design of Instruction" by Dick, Carey & Carey (Digital)

Additional Textbooks:

- "Understanding by Design" by Wiggins & McTighe

- "50 Strategies to Boost Cognitive Engagement" by Rebecca Stobaugh

- "Teaching Students to Drive Their Brains" by Wilson & Conyers

- Sample pdf

- Sample PPT
